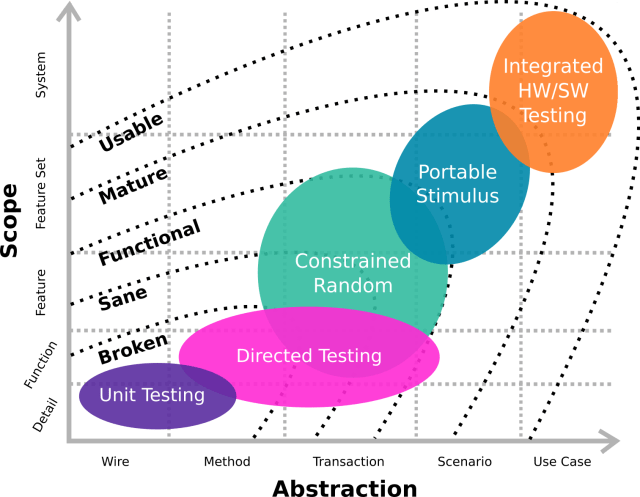

If you’ve been following my blog since DVCon earlier this year, you’ll have noticed that the introduction of portable stimulus has me thinking more in terms of integrated verification flows. Specifically, where our verification techniques are best applied and how they complement each other as part of a complete flow.

At DAC, I had an opportunity to summarize some of these ideas in a 30-minute presentation called Building An Integrated Verification Flow, held in the Verification Academy booth. The audience at the conference was smallish, but the good news is that all the sessions were recorded, so you can watch Building An Integrated Verification Flow on the Verification Academy site.

For backstory, here’s a list of the relevant posts since Feb…

- Portable Stimulus And Integrated Verification Flows

- A Verification Where And How

- Verification That Flows

- Verification Planning From The Top Down

- 5 Steps In Design Maturity

- A Cure For Our Coverage Hangover

- Balancing Verification Development With Delivery

There's still more to come on this topic of integrated verification flows, so stay tuned!

-neil

Funny thing happened today.

Verification engineers have a habit of over-engineering testbenches and infrastructure. We can all admit that, no? I can certainly admit it. From firsthand experience, I can also admit that the testbenches I’ve over-engineered had a lot of waste built into them: unnecessary features I forced people to use and features that were never used at all. And there’s no good reason for it other than that I like building new testbench features. I don’t think I’m alone there.